
Outside the Checkbox: Why AI Assessment Beats Traditional Training Metrics

My old psychology professor had a lot of complaints about the mental health establishment, but his number-one grievance was the way insurance companies tried to reduce every patient’s condition to a checklist of diagnostic codes. He once recounted a conversation with an insurance company case manager:

“So, is the patient diagnosed with F41.0 – panic disorder – or F41.1 – generalized anxiety disorder? And how long do you estimate their treatment will take?”

“I don’t know…” my professor replied. “They’re neurotic as Hell and we’re working on it… call back in a few weeks.”

Today, I can empathize with both sides of that tense conversation. It mirrors how I feel about the metrics learning departments have traditionally used to gauge the success of training programs, and about how AI might offer a more holistic, more human way to assess trainees’ progress and performance.

The Old Math

If an insurance company were training a cohort of newly hired case managers today, it might have them complete a few e-learning courses on data privacy and medical coding. At the end, each session would be marked “passed,” “failed,” or perhaps a neutral “complete,” along with a score if the course ended in a quiz.

The database record for the session (here, an xAPI statement) might look something like this:

```json
{
  "actor": {
    "objectType": "Agent",
    "name": "Jordan Smith",
    "mbox": "mailto:jordan.smith@example.com"
  },
  "verb": {
    "id": "http://adlnet.gov/expapi/verbs/passed",
    "display": { "en-US": "passed" }
  },
  "object": {
    "id": "https://example.com/course/healthcare-data-privacy",
    "objectType": "Activity"
  },
  "result": {
    "score": {
      "scaled": 0.85,
      "raw": 85,
      "min": 0,
      "max": 100
    },
    "success": true,
    "completion": true
  },
  "timestamp": "2025-10-03T14:25:02Z"
}
```

A particularly ambitious course designer might also record the trainee’s answers to individual quiz questions, though most learning departments don’t bother with that level of detail (assuming their Learning Management System even reports it).
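To make the old math concrete, here is a minimal Python sketch of how a pile of records like the one above gets boiled down to the two numbers on the quarterly slide. It assumes one completion statement per learner and reads only the `object.id`, `result.completion`, and `result.score.scaled` fields; the extra learners and the medical-coding course URL are made up for illustration.

```python
import json
from statistics import mean


def slide_metrics(statements: list[dict], course_id: str) -> dict:
    """Boil xAPI statements for one course down to 'completions' and 'average score'.

    Assumes at most one completion statement per learner (no retakes).
    """
    done = [
        s for s in statements
        if s["object"]["id"] == course_id and s.get("result", {}).get("completion") is True
    ]
    # xAPI allows result.score.scaled from -1 to 1; these courses use 0 to 1.
    scores = [
        s["result"]["score"]["scaled"]
        for s in done
        if "scaled" in s["result"].get("score", {})
    ]
    return {
        "completions": len(done),
        "average_score_pct": round(100 * mean(scores)) if scores else None,
    }


# Hypothetical sample: two learners finished the privacy course, one finished a different course.
sample = json.loads("""[
  {"actor": {"mbox": "mailto:jordan.smith@example.com"},
   "object": {"id": "https://example.com/course/healthcare-data-privacy"},
   "result": {"score": {"scaled": 0.85}, "success": true, "completion": true}},
  {"actor": {"mbox": "mailto:alex.lee@example.com"},
   "object": {"id": "https://example.com/course/healthcare-data-privacy"},
   "result": {"score": {"scaled": 0.99}, "success": true, "completion": true}},
  {"actor": {"mbox": "mailto:sam.ortiz@example.com"},
   "object": {"id": "https://example.com/course/medical-coding"},
   "result": {"completion": true}}
]""")

print(slide_metrics(sample, "https://example.com/course/healthcare-data-privacy"))
# {'completions': 2, 'average_score_pct': 92}
```

Everything the function can see is a status flag and a number; nothing in the record says whether Jordan can actually handle patient data correctly.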

And when it finally came time for the learning department to report to management on the success of its programs (usually during a three-minute slot with a single presentation slide at the very end of the quarterly meeting), the Director of Online Learning would say something like:

“This quarter we had 784 case managers complete our data privacy training with an average score of 92%. And the average feedback score from participants was 4.8 out of 5.”

To which the Senior Vice President of Human Resources would nod and then declare the meeting adjourned so everyone could head over to the Cheesecake Factory restaurant for an expense account lunch.

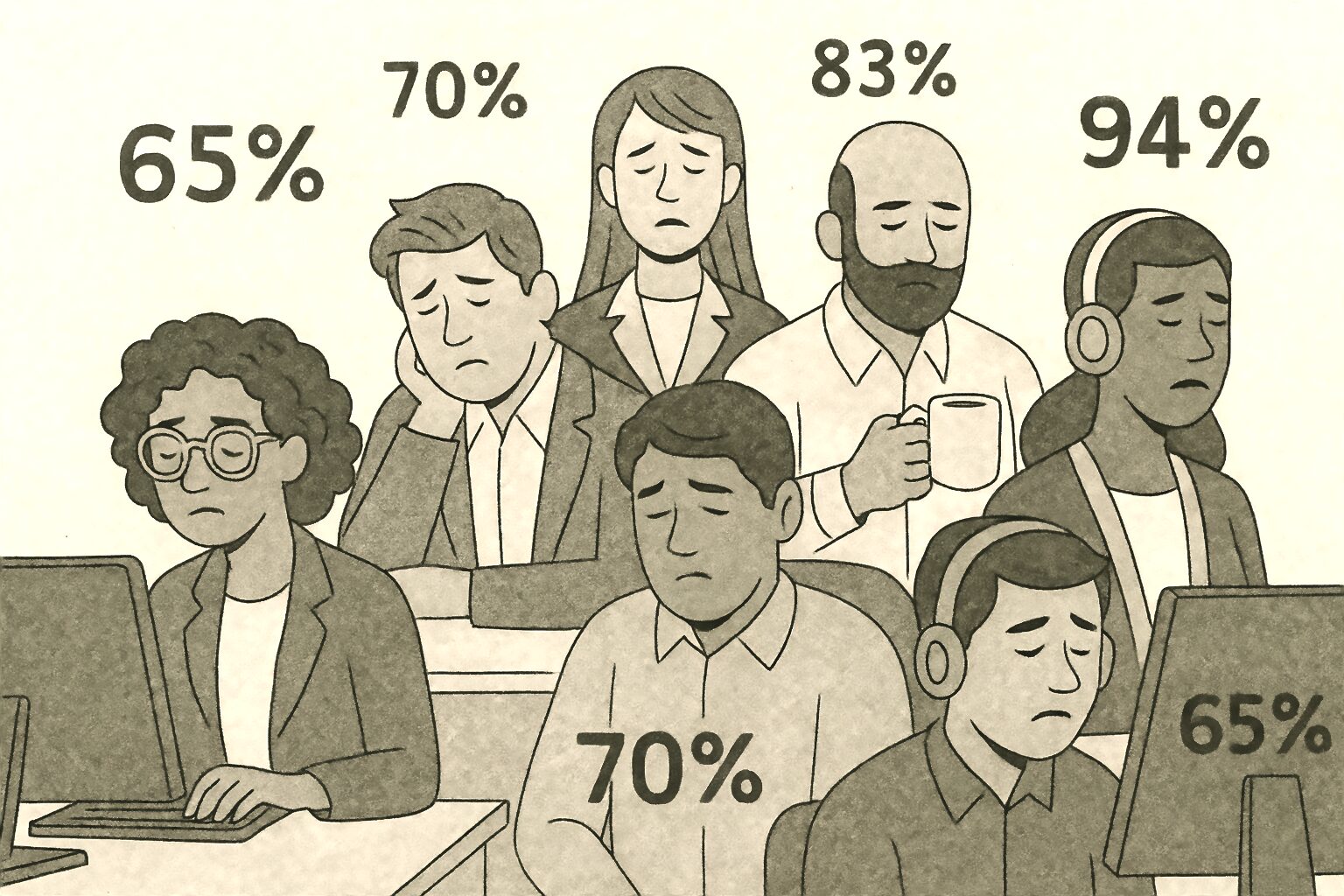

Of course, that brief slide with metrics told the audience nothing about:

- Whether participants actually retained the course’s skills and concepts once they were back on the job.

- Whether it improved outcomes for patients or the insurance company’s business.

- Whether the training actually shifted behavior, attitudes, or decision-making.

Essentially, saying “784 case managers completed the course” is like a hospital director bragging that “all our patients received prescriptions” without asking if they actually got better.

The Impossible Ideal

Now, anyone who’s been in learning and development for more than a few years will acknowledge that tracking completions, quiz scores, and smile sheets tells us very little about whether people actually learned anything, let alone applied it on the job.

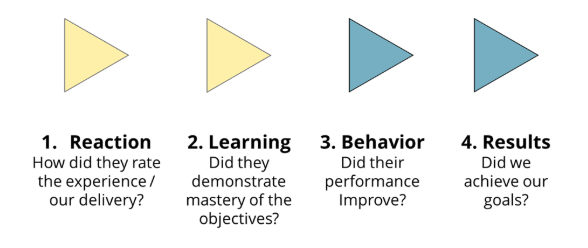

And most learning professionals are familiar with the old Kirkpatrick model, which says we should measure not only participants’ reactions (Did people like it?) and learning (Did they pass the quiz?), but also behavior (Are they using the skills at work?) and results (Did the training move the needle for the business?).

But while this is all great in theory, it’s almost impossible in practice. Most learning departments have no access to the performance data they would need to measure behavior change, unless they’re in a field like sales (where everything boils down to numbers) or call centers (where every second is logged and analyzed). For everyone else, performance data lives in other systems, such as HR, operations, and finance, and it’s rarely shared in a way that ties back to training.

Even asking managers to include a couple of training-related questions in performance reviews is hopeless. Those conversations are already crowded with competing priorities (IT asking about phishing simulations, the wellness team asking about yoga classes), and “How often do you use what you learned in the data privacy course?” rarely makes the cut.

Thus, even seasoned learning leaders end up reporting completions, quiz scores, and feedback ratings, against their better judgment. Because what else can we do?

Enter AI

Ironically, artificial intelligence offers a way for L&D departments to make their assessment metrics less mechanical and more human.

When AI first went mainstream, hardly anyone in the learning & development world was saying, “At last, a way to finally measure the real impact of training!” Instead, the excitement was mostly about convenience (“Neat — this robot can turn our employee handbook into a script for compliance training…”) while the more adventurous wondered if AI could act as a virtual coach or roleplay partner.

That was exactly our mindset when we began building our own AI coaching platform. In the beginning, our focus was squarely on the learner experience: making sure the AI applied sound coaching methods and that sessions stayed on topic and grounded in each client’s approved data.

When we eventually turned to reporting, our first instinct was conservative: “How can we get the platform to produce the same metrics training departments are already used to?” So that’s what we built. The platform generated completions and quiz scores in SCORM, the standard format used by most learning management systems, and for about a year we were pretty pleased with ourselves.

But then we added the ability for AI coaches to recall past interactions with a learner. And that’s when it hit us: if an AI coach can take notes for itself, why not also generate notes for the learner… or for their manager?

Suddenly, instead of a string of numbers, session data looked like this:

SUMMARY:

Iris, the AI coach, discussed climate-risk insurance policies with Santina, focusing on her client portfolio in construction and agriculture.

Iris provided tailored recommendations for both sectors, including policies designed to mitigate risks associated with extreme weather events, resource efficiency, and regulatory shifts. The conversation focused specifically on one of Santina’s clients, a large commercial vineyard involved in wine and fruit production.

The conversation emphasized challenges in assessing the degree of climate risk, with Iris requesting additional information about the location and scale of the vineyard’s operations to refine her recommendations.

The session also examined Santina’s client relationships. While she demonstrates a clear understanding of client priorities – such as cost savings in construction and operational resilience in agriculture – her limited direct access to decision-makers may hinder her ability to promote climate-risk insurance products effectively. Iris suggested leveraging internal company relationships to improve access, as well as engaging with industry associations and networks to build credibility and expand reach.

Overall, Santina shows strong awareness of client needs and sector-specific opportunities. Continued work with the AI coach should focus on developing strategies to frame climate-risk insurance as both a protective and value-adding tool for clients. At this stage, no immediate manager intervention is required; instead, targeted coaching on relationship-building and persuasive framing will support Santina in advancing climate-risk insurance adoption.
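The exact prompts behind a summary like this one will vary by platform, so treat the following as a hypothetical Python sketch of the general idea: hand the model the session transcript plus the coach’s notes from earlier sessions, ask for a manager-facing summary in a fixed shape, and ask it to end with a machine-readable line flagging whether manager intervention is needed. The `complete` argument stands in for whatever model API you call, and the `MANAGER_ACTION` line is an illustrative convention, not a standard.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Turn:
    speaker: str  # e.g. "coach" or "learner"
    text: str


# Hypothetical instructions for illustration; real prompts will differ by platform.
SUMMARY_INSTRUCTIONS = (
    "Summarize this coaching session for the learner's manager under the heading 'SUMMARY:'. "
    "Cover the topics discussed, strengths the learner showed, gaps or barriers, "
    "and what future coaching should focus on. "
    "End with exactly one line: 'MANAGER_ACTION: none' or 'MANAGER_ACTION: recommended'."
)


def build_summary_prompt(learner: str, turns: list[Turn], prior_notes: str = "") -> str:
    """Assemble the prompt from the transcript plus the coach's notes from earlier sessions."""
    parts = [SUMMARY_INSTRUCTIONS, f"Learner: {learner}"]
    if prior_notes:
        # Recalled notes let the summary describe progress, not just this one session.
        parts.append(f"Notes from previous sessions:\n{prior_notes}")
    transcript = "\n".join(f"{t.speaker}: {t.text}" for t in turns)
    parts.append(f"Transcript:\n{transcript}")
    return "\n\n".join(parts)


def summarize_session(
    learner: str,
    turns: list[Turn],
    complete: Callable[[str], str],  # hypothetical stand-in for your model API call
    prior_notes: str = "",
) -> tuple[str, bool]:
    """Return the summary text and whether it flags the session for manager follow-up."""
    summary = complete(build_summary_prompt(learner, turns, prior_notes))
    lines = summary.strip().splitlines()
    # Read the flag from the last line so reports can filter sessions without rereading them.
    needs_manager = bool(lines) and lines[-1].strip().lower() == "manager_action: recommended"
    return summary, needs_manager


# Usage with a fake model, so the sketch runs without any API key.
def fake_model(prompt: str) -> str:
    return "SUMMARY:\nDiscussed climate-risk policies for a vineyard client.\nMANAGER_ACTION: none"


text, flag = summarize_session(
    "Santina",
    [Turn("coach", "Tell me about your vineyard client."),
     Turn("learner", "They grow wine grapes and fruit.")],
    fake_model,
)
print(flag)  # False
```

Keeping the flag on a separate, predictable line means a reporting dashboard can list sessions needing manager follow-up without anyone rereading the summaries, while the prose stays readable for humans.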

Insight at Scale

Admittedly, part of the reason organizations rely so heavily on aggregate completions and quiz scores is that no training program manager has time to read hundreds (let alone thousands) of individual conversation summaries to gauge overall progress in a large-scale corporate training program.

Fortunately, it was easy enough to solve that problem using AI as well. Once the system was generating session summaries, we began feeding those summaries back into the AI agent to produce meta-summaries, both of individual learners’ progress over time and of entire cohorts.
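Feeding summaries back into the model amounts to hierarchical (map-reduce style) summarization. Here is a minimal Python sketch, assuming a hypothetical `summarize` function that takes a list of texts and returns one summary: pack the session summaries into batches that fit a size budget, summarize each batch, and repeat until a single report remains. Run it on one learner’s summaries in date order for a progress report, or on a whole cohort for a briefing. The 20,000-character default is an arbitrary placeholder for your model’s context limit.

```python
from typing import Callable


def batch_by_budget(texts: list[str], max_chars: int) -> list[list[str]]:
    """Greedily pack texts, in order, into batches whose combined length stays within max_chars."""
    batches: list[list[str]] = []
    current: list[str] = []
    size = 0
    for text in texts:
        if current and size + len(text) > max_chars:
            batches.append(current)
            current, size = [], 0
        current.append(text)  # a single oversized text still gets its own batch
        size += len(text)
    if current:
        batches.append(current)
    return batches


def meta_summarize(
    summaries: list[str],
    summarize: Callable[[list[str]], str],  # hypothetical: wraps your model call
    max_chars: int = 20_000,  # placeholder for the model's context budget
) -> str:
    """Summarize batches of summaries, then summaries of those, until one report remains."""
    if not summaries:
        raise ValueError("no summaries to combine")
    level = list(summaries)
    while len(level) > 1:
        batches = batch_by_budget(level, max_chars)
        if len(batches) == len(level):
            # Every item was too big to share a batch; pair them up anyway so each
            # round still shrinks the list (these pairs may exceed max_chars).
            batches = [level[i:i + 2] for i in range(0, len(level), 2)]
        level = [summarize(batch) for batch in batches]
    return level[0]


# Usage with a stand-in summarizer that just reports how many texts it combined.
def fake(batch: list[str]) -> str:
    return f"summary of {len(batch)} texts"


print(meta_summarize(["session notes " * 50] * 10, fake, max_chars=2_000))
# summary of 5 texts
```

Batching by size rather than by a fixed count keeps each call within the model’s limit even when some sessions produce much longer summaries than others.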

Instead of a slide reading ‘784 completions, average score 92%,’ managers could now see something closer to a real executive briefing: a snapshot of insights, opportunities, and areas for growth.

CLIMATE RISK INSURANCE COHORT META-SUMMARY

Over the past three months, Iris, the AI coach, facilitated individualized sessions with 385 participants focused on climate-risk insurance across diverse sectors including construction, agriculture, transportation, manufacturing, energy, and finance. Conversations emphasized strategies for managing exposure to extreme weather, supply chain disruptions, regulatory change, and the transition to low-carbon operations.

Key Insights

- Strengths: Across all sectors, participants demonstrated growing fluency in connecting climate-risk insurance with tangible business value such as operational continuity, regulatory compliance, and cost control.

- Challenges: A recurring barrier was limited direct access to senior decision-makers. Many participants relied on mid-level contacts, making it harder to advance conversations about climate-related financial products.

- Opportunities: Leveraging internal networks, industry associations, and sector-specific business forums emerged as key strategies for building credibility and expanding influence with client leadership teams.

Overall Progress

The cohort as a whole shows strong development in sector knowledge and the ability to identify industry-specific opportunities for climate-risk insurance. Continued coaching should prioritize stakeholder engagement skills, persuasive framing, and strategies for translating technical risk insights into clear business outcomes. At this stage, no systemic performance issues require immediate managerial intervention; instead, the focus should remain on refining client access strategies and deepening participants’ confidence in positioning climate-risk insurance as both a protective and value-adding solution.

Since then, measuring the impact of AI coaching interventions has felt more like reading a real strategic report than reading SCORM tea leaves.

Making the Grade

Of course, qualitative summaries are great, but what about situations where we need to assign a specific number or tick a pass/fail box? Can we trust an AI coach or tutor to make those kinds of judgments? Will stakeholders accept the results as reliable? And if an AI coach gives one learner an “85” and another a “78,” will everyone accept it as fair?

So far, here is what we’ve been telling clients about AI assessment:

- Use AI reporting data to get a general, holistic sense of how learners are progressing and where they might need intervention or reinforcement.

- Do use AI coaches and free-response scenarios as part of regulatory compliance training, but don’t use an AI activity as the final assessment. Compliance departments and attorneys want to know that everyone read the exact same questions, worded the exact same way, and gave the exact same approved answers (this is not the place to show off AI’s creative abilities).

- For all other courses, benchmark your AI agents’ assessments against human assessments of the same transcripts. If the AI proves comparably fair and accurate, go ahead and use it. (In our early projects, we’ve actually found AI grading of free-response interactions to be slightly more consistent than human grading.)

- Have the AI agent explain up front whenever an interaction will be assessed or graded, so people don’t get paranoid about talking openly with their AI coach about their workplace challenges and the things they don’t quite understand.

Conclusion

Just as my professor refused to reduce patients’ conditions to diagnostic codes, learning professionals no longer have to reduce participants’ learning journeys to completions and quiz scores. With AI, we can make our learning metrics more human – and more meaningful for everyone involved.

Hopefully this article provided some useful perspective on how AI-powered learning tools can offer better insights into training outcomes. If you are interested in implementing these types of workforce training solutions for your organization, contact Sonata Intelligence for a consultation.

Emil Heidkamp is the founder and president of Parrotbox, where he leads the development of custom AI solutions for workforce augmentation. He can be reached at emil.heidkamp@parrotbox.ai.

Weston P. Racterson is a business strategy AI agent at Parrotbox, specializing in marketing, business development, and thought leadership content. Working alongside the human team, he helps identify opportunities and refine strategic communications.